Audio is an often overlooked element of multimedia experiences

We think it’s time that changed. Here, creative technologist Danny Keig explores our latest experiments with interactive audio.

Over the last decade or so I have had the privilege to pass through a few professional fields. After working as a composer, performer, and live and studio sound engineer, I found myself getting deep into programming and creative technology. Interactive audio is the melting pot of these overarching domains – technology and sound. It enables us to bring even more life to music and sound by facilitating seamless interplay between someone – or something – and a technological response. It allows participants in an experience to feel like creators or instigators by opening up parts of the sound world for them to influence and control.

In my work here at S1T2, I’ve had a few notable opportunities to work with sound in new ways. As someone who has been on all sides of audio production, I’d like to revisit some of these projects and share my approach to sound. I’ll be exploring what we can achieve with audio in the world of interactive experiences, as well as dissecting decisions in audio programming, composition and sound design. Let’s see what sounds can do!

Why go thinking about audio?

Imagine watching any film in a cinema. If the sound were suddenly muted, or even turned down, the world you were experiencing would be completely lost. It may not seem obvious, but it's often said that we perceive changes and inconsistencies in audio much more quickly and precisely than we do with visual elements. When we take this concept into an interactive activation or exhibition, a static approach to sound (assuming we've been graced with sound at all) is going to leave us feeling disconnected, disconcerted or just plain disinterested.

Audio is important in interactive environments because of the role it plays in the immersive nature of an experience. It provides another avenue for interaction, one that is often not as widely explored. It can create strong feelings of an experience being organic or unpredictable, or provide another means of real-time feedback to participant actions.

I believe that audio is still a sidelined element in many forms of media. This is particularly the case in interactive experiences, where the blueprints and outlines often rely heavily on the visually grandiose. The importance of sound comes to the forefront when dealing with mediums such as virtual reality (VR), where the virtual world closes in around you and creators are forced to deal with audio as a form of continuity. When it comes down to it though, for any multimedia experience – be it film, TV, games, or a physical installation – audio can and should carry at least 50% of the emotional response. The difficulty comes with how sneaky – and ironically silent – audio’s role can seem.

OK, I promise to think about it, but what does that look like?

There are countless ways to start thinking about sound. As Matthew Herbert suggests in the doco linked above, gone are the days of being restricted by technology. The possibilities are truly endless now. It is important then to focus on ideas, stories and the goal of creating unique experiences.

I’d like to talk about three individual projects in which the ideas and stories required an interactive audio component in order to execute the vision. I’ll reflect on how and why we chose to integrate sound into these projects, and how it contributed to the overall experience.

Caraoke Studio

One of the first projects I worked on at S1T2 was Kia Caraoke Studio, a unique and bizarre experience we built for the 2019 Australian Open. In this experience, a group of up to 3 people would hop into a car and sing Jet’s ‘Are You Gonna Be My Girl’, karaoke style. With some clever tech trickery, the built-in dashboard screen showed a live feed of the car, along with an AR tennis star sitting in the driver’s seat, singing along.

The interactive audio component of this project was based on scoring both singing ability and enthusiasm. Here, audio was vital to creating an engaging experience: the song's melody drove gentle visual cues showing which notes to sing, and the singers' voices were translated into meaningful interactive elements, a live numeric score and real-time visual feedback.

Interactive audio drives visual feedback

The focal point inside the car was the built-in dashboard screen. As the song played, visitors saw a scrolling piano roll animation indicating which notes were coming up. We then visualised real-time audio input as a tennis ball moving up and down on the screen, visually tracking the pitch of the participants’ voices. As the upcoming animated notes scrolled into view, the tennis ball moved up and down, hitting (or missing) each note as visitors sang and triggering further animations on notes that achieved autotune-like accuracy.

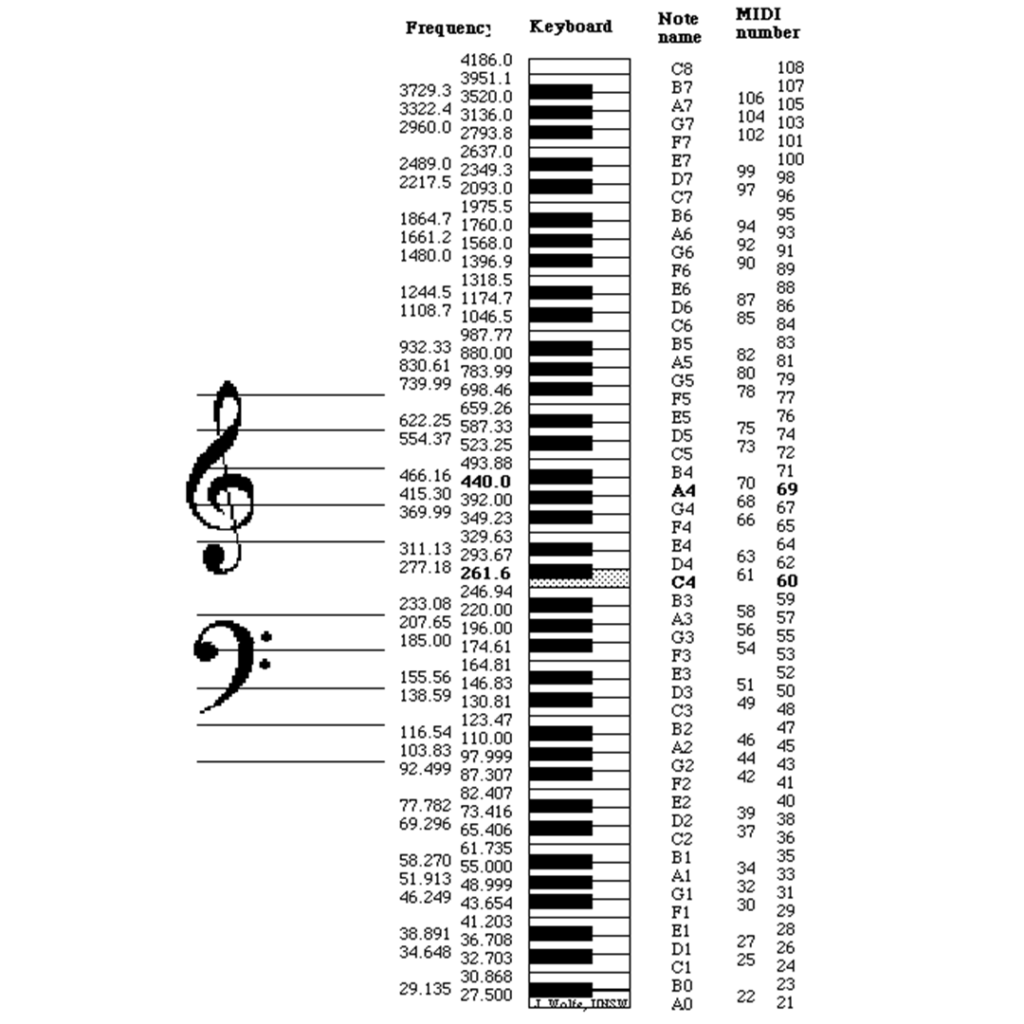

The technology behind the experience was openFrameworks, a C++ creative coding toolkit that handled both the audio processing and the UI elements. After capturing audio through the microphone, we translated frequency information into musical notes using an open source library called aubio. Using MIDI (Musical Instrument Digital Interface), a tried-and-tested music technology, we also mapped out the melody of the song beforehand, so openFrameworks knew which note to expect at any point in the song. From there, a simple tally system kept track of how many of the song's notes the singers hit.
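The note-matching step can be sketched in a few lines of Python. This is a hedged illustration, not the project's openFrameworks code: it assumes a pitch detector (aubio, in the original) has already produced a frequency in Hz for each scoring step, assumes A4 = 440 Hz, and uses hypothetical names (`freq_to_midi`, `score_notes`). It compares pitch classes so that a singer an octave away from the melody still scores, which is a design assumption rather than something the project confirms.

```python
import math
from typing import List, Optional, Tuple

A4_HZ = 440.0  # assumed concert-pitch reference (MIDI note 69)

def freq_to_midi(freq_hz: float) -> int:
    """Round a detected frequency to the nearest MIDI note number."""
    return round(69 + 12 * math.log2(freq_hz / A4_HZ))

def score_notes(expected: List[int], detected_hz: List[Optional[float]]) -> Tuple[int, int]:
    """Tally how many expected melody notes were hit.

    expected:    MIDI note the melody calls for at each scoring step
    detected_hz: detected pitch at the same step, or None/0 if nothing was sung
    Returns (hits, total). Octave errors are forgiven by comparing pitch
    classes (note % 12), an assumption made with group singing in mind.
    """
    hits = 0
    for want, got in zip(expected, detected_hz):
        if got and got > 0 and freq_to_midi(got) % 12 == want % 12:
            hits += 1
    return hits, len(expected)
```

For example, `score_notes([64, 64, 67, 69], [329.6, 330.0, None, 220.0])` returns `(3, 4)`: the silent step misses, and the 220 Hz reading (A3) still counts against the expected A4.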

Creating mood through smoothing

As any data wrangler knows, real-time streaming data often requires a lot of smoothing, and audio is no exception. Smoothing in audio land is important for multiple reasons. In terms of sound output, it’s all about interpolation – how we make audio edits, cuts, fades and transitions more graceful and unnoticeable. If we don’t deal with this we’ll be left with a sound that is jarring and distracting. As Radiohead producer Nigel Godrich often says to guitarist Jonny Greenwood, “That’s a great sound from Max, but could you get rid of the clicks”.
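On the output side, a common source of clicks is a sound starting or stopping at a non-zero sample value, which the ear hears as a sudden step. A minimal, hedged sketch of the usual remedy, short linear gain ramps, is shown below in plain Python on a list of samples; real-time environments apply the same idea to the live signal, and `apply_fades` and its fade length are illustrative choices, not settings from these projects.

```python
from typing import List

def apply_fades(samples: List[float], fade_len: int) -> List[float]:
    """Return a copy of samples with linear fade-in and fade-out ramps.

    fade_len is in samples and is capped at half the buffer, so the two
    ramps never overlap.
    """
    out = list(samples)
    n = min(fade_len, len(out) // 2)
    for i in range(n):
        gain = i / n           # 0.0 at the buffer edge, rising toward 1.0
        out[i] *= gain         # fade in
        out[-1 - i] *= gain    # mirrored fade out
    return out
```

As a sense of scale, a 5 ms ramp at a 48 kHz sample rate is 240 samples.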

With regard to input, the focus is on turning what the microphone hears into useful data. For Caraoke Studio, smoothing the input data didn't change what we heard, but it made the scoring algorithm more flexible and the visual feedback more purposeful. In this case, simple data averaging worked wonders: taking the last x readings and averaging them gave the illusion of silky-smooth input data.
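The averaging described above is a moving average over a fixed window. A minimal sketch, assuming pitch readings arrive as MIDI note numbers and using a hypothetical window size; a `deque` with `maxlen` drops the oldest reading automatically:

```python
from collections import deque

class MovingAverage:
    """Smooth a stream of readings by averaging the last `window` values."""

    def __init__(self, window: int) -> None:
        self.readings = deque(maxlen=window)  # oldest reading drops off automatically

    def update(self, value: float) -> float:
        self.readings.append(value)
        return sum(self.readings) / len(self.readings)
```

A larger window gives smoother output but responds more slowly to a genuine change of note, so the window length is itself a design choice.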

Having an average pitch for the last x samples also meant we could evaluate the stability of pitch, giving higher scores to participants with better voice control. Data smoothing here was even more important because the mood of the experience was a ‘carefree car sing along’ rather than an indoor karaoke night. With up to four people yelling into the mic at any one time, the algorithm’s confidence in the pitch was reduced, and data smoothing was very helpful in assessing the group’s pitch accuracy as a whole.
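Pitch stability can be scored from the same window of readings. The sketch below is an assumption about how such a bonus might work, not the project's formula: it takes the population standard deviation of the recent readings in semitones and maps it to a 0 to 1 bonus, with a hypothetical `max_spread` cut-off.

```python
import statistics
from typing import Sequence

def stability_bonus(recent_pitches: Sequence[float], max_spread: float = 1.0) -> float:
    """Return 0.0..1.0 for how steady the last few pitch readings were.

    recent_pitches: the last x readings as (fractional) MIDI note numbers
    max_spread:     standard deviation, in semitones, at which the bonus reaches 0
                    (a hypothetical tuning value)
    """
    if len(recent_pitches) < 2:
        return 0.0  # not enough readings to judge stability
    spread = statistics.pstdev(recent_pitches)
    return max(0.0, 1.0 - spread / max_spread)
```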

Data Heart

When it comes to interactive audio systems, it’s a lot more common to produce output through user actions, rather than dealing with sound inputs. Just a few months after Caraoke Studio wrapped, we had a go at this too in a large-scale interactive installation called Data Heart.

Located on a huge 9m x 6m LED screen in a New York corporate lobby, Data Heart is a captivating particle visualisation that helps visitors explore the vision and meaning behind our client’s data-driven technology company. With multiple interactive and ambient animation states, music and sound were vital in fusing the experience together and balancing different levels of user engagement.

Creating a dynamic sound world

Working with a music composition house already engaged by our client, the challenge was to develop their existing work into a breathing interactive soundscape. With many different installation states, a number of different triggers and cues, and a complex sound system to work with, there were many considerations. In order to treat sound more flexibly we pulled out Max, a graphical programming environment well suited to sound and interactivity.

Treating audio as a malleable medium, we split the existing composition into about 8 stems – individual tracks each representing a group of instruments (percussion or piano for example). By transitioning between different mixes of the stems, we could alter the mood of the space, creating sonic variation between a position near the screen and the big picture further back. We used these different mixes, along with various user triggers, to dynamically shift the whole soundscape through user positioning.
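A position-driven blend between two stem mixes might look like the sketch below. The stem names, gain presets and distance thresholds are hypothetical (the project used about 8 stems and built its logic in Max, not Python). Because both presets drive the same stems, the sketch interpolates each stem's gain linearly, so a stem set to the same level in both mixes stays at that level throughout the transition.

```python
from typing import Dict

# Hypothetical stems and gain presets (linear gain, 1.0 = unity).
NEAR_MIX: Dict[str, float] = {"piano": 1.0, "strings": 0.8, "percussion": 0.2, "bass": 0.4}
FAR_MIX: Dict[str, float] = {"piano": 0.6, "strings": 0.8, "percussion": 1.0, "bass": 1.0}

def stem_gains(distance_m: float, near_m: float = 2.0, far_m: float = 8.0) -> Dict[str, float]:
    """Blend the near and far stem mixes by a visitor's distance from the screen."""
    t = (distance_m - near_m) / (far_m - near_m)
    t = min(1.0, max(0.0, t))  # 0 = fully near mix, 1 = fully far mix
    return {stem: (1 - t) * NEAR_MIX[stem] + t * FAR_MIX[stem] for stem in NEAR_MIX}
```

Halfway between the thresholds (5 m here), the piano sits at 0.8 and the percussion at 0.6, while the strings stay at 0.8.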

Cues + signposts of spatial audio

With a well-specced sound system, we had a few unique opportunities to explore spatial sound. The sound system in the lobby was multichannel but also featured some frequency band isolation for pinpointing certain frequency ranges to distinct locations in the room. We used this to our advantage, particularly in signposting different states of the installation to visitors.

For example, we decided to program the experience’s welcome trigger to fire whenever a visitor passed by a defined post near the security desk as they entered. This resulted in a sound triggering almost exclusively in this location, while a transformation in on-screen animations drew attention to the experience and encouraged visitors to step closer.

Once visitors entered the interactive zone, they immediately triggered a dropping, plunging sound as the visuals transformed into an interactive state. The mix of music then changed, with lighter, more delicate instruments fostering a sense of intimacy and connection with the particles on the screen. Leaving the space also triggered an exit sound, which flowed back into the music’s ambient state with the full instrument mix.
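The cue flow across these states can be modelled as a small state machine. This is a hypothetical sketch: the cue names are invented, and it assumes the exit cue fires when the interactive zone becomes empty rather than for each departing visitor, which the description above leaves open.

```python
from enum import Enum, auto
from typing import List

class State(Enum):
    AMBIENT = auto()
    INTERACTIVE = auto()

class SoundDirector:
    """Turns tracking events into named audio cues (hypothetical cue names)."""

    def __init__(self) -> None:
        self.state = State.AMBIENT
        self.cues: List[str] = []  # cues that would be sent to the audio engine

    def on_entry_post_passed(self) -> None:
        # The welcome sound fires at the entrance whatever the installation state.
        self.cues.append("welcome")

    def on_zone_count(self, people_in_zone: int) -> None:
        if self.state is State.AMBIENT and people_in_zone > 0:
            self.state = State.INTERACTIVE
            self.cues += ["drop", "mix:delicate"]  # plunge sound, lighter instruments
        elif self.state is State.INTERACTIVE and people_in_zone == 0:
            self.state = State.AMBIENT
            self.cues += ["exit", "mix:full"]      # exit sound, back to the full ambient mix
```

Keeping the transitions in one place makes it easier to guarantee that each cue fires exactly once per change of state, rather than on every tracking frame.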

We spent a great deal of time fine-tuning every transition and action so that the sound never once took you out of the experience, and so that each location in the room had a balanced mix with no resonating frequencies. Due to the size of the lobby, we were even able to create the illusion that there weren't speakers in the space, with sound instead seeming to emanate from the particles on the screen.

Translating into the sound world

As visitors entered the interactive zone, their silhouettes were represented on screen as a flowing flare of particles that subtly responded to their movement through visitor tracking. To further clue participants into the fact that their movements were being represented, we used this same data to generate sounds as visitors moved through the space. Walking, running or waving your arms would trigger a variation of organic chimes and pitched percussive sounds, signalling that you were now part of the piece.
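One way to turn tracked movement into chimes is a per-person speed threshold with a cooldown, so that walking produces occasional notes rather than a constant stream. Everything in this sketch is an assumption for illustration: the speed units, the thresholds, the pentatonic scale and the rule that faster movement picks higher notes.

```python
from typing import Dict, Hashable, Optional

PENTATONIC = [0, 2, 4, 7, 9]  # major pentatonic semitone offsets; the scale is an assumption

class MovementChimes:
    """Map each tracked visitor's speed to an occasional pitched chime."""

    def __init__(self, base_note: int = 72, speed_threshold: float = 0.5,
                 cooldown_s: float = 0.25) -> None:
        self.base_note = base_note              # MIDI note of the lowest chime
        self.speed_threshold = speed_threshold  # metres per second needed to trigger
        self.cooldown_s = cooldown_s            # minimum gap between one person's chimes
        self.last_trigger: Dict[Hashable, float] = {}

    def update(self, person_id: Hashable, speed: float, now_s: float) -> Optional[int]:
        """Return a MIDI note to play for this person now, or None."""
        if speed < self.speed_threshold:
            return None
        last = self.last_trigger.get(person_id)
        if last is not None and now_s - last < self.cooldown_s:
            return None
        self.last_trigger[person_id] = now_s
        # Faster movement selects higher scale degrees, capped at the top of the scale.
        degree = min(int(speed / self.speed_threshold) - 1, len(PENTATONIC) - 1)
        return self.base_note + PENTATONIC[degree]
```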

With such a large screen and floor space, we were able to represent the movements of up to twelve people through the space. To take advantage of this, we took every chance we could to create contrast between a single user and a group. By virtually mapping sound samples across the floor space with positional tracking data, we created a 2D zoned sample field: simply walking through the interactive zone would create a blend of different textural sound design elements based on your position. Multiplied by the number of people in the zone, this blend created a subtle and unique sound collage.
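A 2D zoned sample field can be approximated by placing one sample at each point of a grid over the floor and giving each visitor bilinear weights for the four grid points around them. This is a hedged sketch, not the project's implementation: the grid layout, the normalised coordinates and the function names are assumptions. Summing every visitor's weights produces the group collage; in practice the totals would likely be scaled or limited before reaching the speakers.

```python
from typing import Dict, Iterable, Tuple

Zone = Tuple[int, int]  # (column, row) of a sample placed on the floor grid

def zone_weights(x: float, y: float, cols: int, rows: int) -> Dict[Zone, float]:
    """Bilinear blend weights for one visitor over a cols x rows grid (both >= 2).

    x and y are the tracked position normalised to 0..1 across the interactive zone.
    The four grid points around the visitor receive weights that sum to 1.
    """
    gx = min(max(x, 0.0), 1.0) * (cols - 1)
    gy = min(max(y, 0.0), 1.0) * (rows - 1)
    c0 = min(int(gx), cols - 2)
    r0 = min(int(gy), rows - 2)
    fx, fy = gx - c0, gy - r0
    return {
        (c0, r0): (1 - fx) * (1 - fy),
        (c0 + 1, r0): fx * (1 - fy),
        (c0, r0 + 1): (1 - fx) * fy,
        (c0 + 1, r0 + 1): fx * fy,
    }

def field_gains(positions: Iterable[Tuple[float, float]], cols: int, rows: int) -> Dict[Zone, float]:
    """Sum every visitor's weights, so more people produce a denser collage."""
    gains: Dict[Zone, float] = {}
    for x, y in positions:
        for zone, w in zone_weights(x, y, cols, rows).items():
            gains[zone] = gains.get(zone, 0.0) + w
    return gains
```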

This was a very subtle element in the experience, but it undoubtedly contributed to the sense of a group’s presence on screen and in the room. When so much of the underlying concept behind the experience revolved around connections, this was a nice addition to help visitors connect their presence and actions with the animations on the screen.

Throughout deployment of Data Heart, we were constantly surprised by how much audio added to the experience. It was that final bit of polish which tied the whole experience together, linking all the different interaction states and creating depth in the journey of moving throughout the space. The interactivity of the music also helped create an unmistakable emotional connection to the piece. Without this, I feel some of the scale and ambition of the project would have been diminished.

Beat the Beat

When the next opportunity to create sound arose – with our Beat the Beat dance experience for Kia at the 2020 Australian Open – we started looking at how we could iterate very quickly by keeping everything in-house and putting our collective experience to work. Having worn the hat of the audio programmer on these last projects, it was now my turn to compose for an interactive audio installation.

In the fashion of arcade dancing games of yore, we developed a modern, dance-based interactive experience to promote the Kia Seltos to tennis fans. This activation featured a large wall-mounted screen tracking an animated Kia Seltos as it drove through a vibrantly lit world, pulsing in time with electronic music. Players on a second, floor-mounted LED screen had to step on targets, score points, trigger musical snippets and create music on the fly.

Composing for interaction

My role in this project was focused solely on composing music that could be quickly integrated into a real-time game engine, providing that feeling of users actually creating the music live in the experience. I worked alongside S1T2’s real-time team who developed the game mechanics and visuals, and who integrated my music into the game.

Composing for Beat the Beat was essentially like composing game music. We were dealing with audio that could change at any moment, that needed to fit together coherently, and that had to provide cues and feedback for user actions. Unlike static music, interactive game music is often composed in chunks. These chunks rely on being musically compatible with many other chunks, and can therefore be looped, interchanged and mixed together all while maintaining a sense of musicality.

The composition became a balancing act between non-repetitiveness, a musically logical structure, a sense of contribution to the composition, and feedback on game performance. Two players could face off against each other and create a composition by stepping on virtual targets as they darted across the LED floor. Each player would also have their own unique lead instrument sound, which would be triggered whenever they hit the game markers. Thus, the experience was both competitive and collaborative in terms of making music together.

Designing audio so audiences can create music

One of the expectations of a musical game is that it is going to sound good. That doesn't leave a huge amount of room for failure – though there definitely will be some. Players needed to feel like they were creating the song, and that they didn't suck at it. As players stepped on their targets, we triggered a series of musical phrases that, when linked together, created a more complete musical picture. These snippets were kept very short and were constrained to certain sections of music so that they were guaranteed to sound decent no matter the skill level of the player.

With tempo-based games, one needs to be aware of how, when and where players can trigger notes. Too much freedom and it becomes difficult to maintain musicality. The challenge in this game was the free-flowing nature of how notes entered and left the screen, and the freedom in where players could hit those notes both in physical space and in musical time. The solution was some careful quantisation of the input data, snapping each trigger to a rhythmic grid: the timing equivalent of autotune.

In practice, this meant finding the quantisation setting that gave a sense of musical skill and mastery of the system with no perceivable delay in hearing the phrases you had just triggered. Quantise to a beat division that is too small and the response starts to feel musically sloppy and unruly, while a longer division creates more latency. We settled on quavers (eighth notes, or half a beat in 4/4 time), which provided just the right balance in response.
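Forward quantisation can be sketched as rounding each trigger time up to the next grid line. This is a hedged illustration: `quantise_up` is a hypothetical helper, and the 120 BPM tempo used below is an assumption, since the track's tempo isn't stated. A quaver is half a crotchet beat, so the default division is 0.5 beats.

```python
import math

def quantise_up(t_s: float, bpm: float, division_beats: float = 0.5) -> float:
    """Return the next grid time at or after t_s, in seconds.

    division_beats is the grid size in beats: 0.5 is a quaver when the beat is
    a crotchet. A phrase can't start before the step that triggered it, so the
    trigger is always delayed forward, by at most one grid step.
    """
    grid_s = 60.0 / bpm * division_beats
    return math.ceil(t_s / grid_s) * grid_s
```

At an assumed 120 BPM, the quaver grid is 0.25 s, so a step at 1.1 s starts its phrase at 1.25 s. Semiquavers (0.25 beats) would cut the worst-case wait to 0.125 s, at the cost of letting phrases land on finer, busier subdivisions, which is the trade-off described above.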

Variation is the name of the game

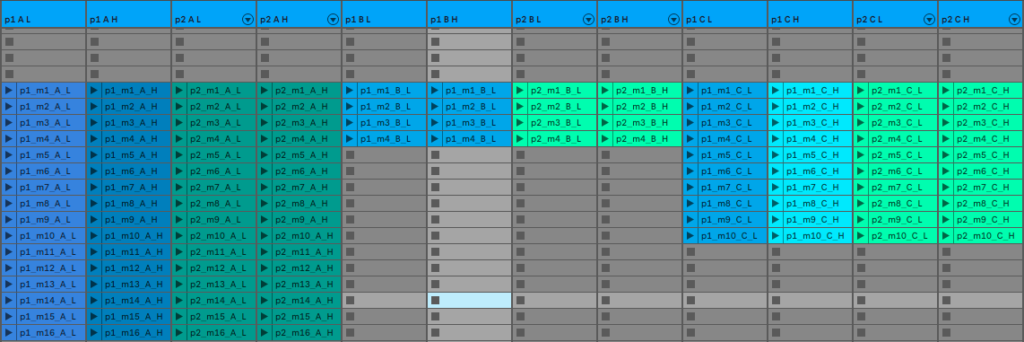

The success of interactive music in Beat the Beat was also tied to how it varied from start to finish and from play to play. We created stacks of different musical chunks that could be interchanged either randomly or according to an intensity curve within the game engine. For each level of intensity – low, medium and high – I composed variations of each musical loop, section, phrase, or note that could communicate a sense of growing energy.
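Choosing a chunk from the right intensity stack can be sketched as banding a continuous intensity value and then picking randomly within the band. The band thresholds, the chunk names and the "avoid an immediate repeat" rule are assumptions added for illustration; the game engine's actual selection logic isn't described in detail.

```python
import random
from typing import Dict, List, Optional

def intensity_band(intensity: float) -> str:
    """Map a 0..1 value from the game's intensity curve to a variation set."""
    if intensity < 1 / 3:
        return "low"
    if intensity < 2 / 3:
        return "medium"
    return "high"

def pick_variation(pool: Dict[str, List[str]], intensity: float,
                   last: Optional[str], rng: random.Random) -> str:
    """Pick a chunk from the current band, avoiding an immediate repeat if possible."""
    options = pool[intensity_band(intensity)]
    candidates = [c for c in options if c != last] or options
    return rng.choice(candidates)
```

Passing an explicit `random.Random` makes the choices reproducible in testing while still varying from play to play in production.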

To allow players to generate a melody themselves, we created shorter melodic phrases that, when triggered in a somewhat random succession, would create unpredictable yet musical results. The game engine would also provide two different modes depending on which player was performing better, and a third mode for when both players were scoring highly together. With each player’s lead instruments and melodies mixed to sound good either as a solo or as a duo, the game engine could pick from a pool of samples which reflected these game modes.
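The three game modes and the random succession of short phrases might be expressed as follows. The threshold for the duo mode, the tie-breaking rule and the pool names are hypothetical.

```python
import random
from typing import Dict, List

def game_mode(score_p1: float, score_p2: float, duo_threshold: float) -> str:
    """Choose which pool of lead phrases to draw from."""
    if score_p1 >= duo_threshold and score_p2 >= duo_threshold:
        return "duo"  # both players scoring highly together
    return "p1_lead" if score_p1 >= score_p2 else "p2_lead"  # ties favour player 1

def next_phrase(pools: Dict[str, List[str]], mode: str, rng: random.Random) -> str:
    """Short phrases drawn in random succession give an unpredictable melody."""
    return rng.choice(pools[mode])
```

Because every phrase in a pool is composed to fit the same harmony, any random ordering stays musical; the randomness only decides which of several compatible phrases plays next.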

For all larger chunks of music, I composed variations for all levels of intensity, with the plan to crossfade between different levels based on how well players performed. The game engine would know that to make a musically logical piece, the song had to progress through certain sections in a certain order, while drawing from melody chunks that were allowed to be played in that particular section. Further variations were triggered by stepping on ‘power ups’ which could trigger additional layers of percussion or synths, or by unlocking completely different sections of music reserved for high scoring players.
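The structural rules can be sketched as a fixed section order with per-section melody whitelists, plus a crossfade across the three intensity versions of the current chunk. The section names, chunk names, bonus placement and triangular crossfade curve below are all assumptions for illustration.

```python
import random
from typing import Dict, List, Optional

SECTION_ORDER = ["intro", "verse", "build", "chorus", "outro"]  # hypothetical structure
ALLOWED_MELODIES: Dict[str, List[str]] = {                      # hypothetical chunk names
    "intro": [], "verse": ["v_a", "v_b"], "build": ["b_a"],
    "chorus": ["c_a", "c_b", "c_c"], "bonus": ["x_a", "x_b"], "outro": [],
}

def song_plan(bonus_unlocked: bool) -> List[str]:
    """Keep the musically logical order; splice in a bonus section for high scorers."""
    plan = list(SECTION_ORDER)
    if bonus_unlocked:
        plan.insert(plan.index("chorus") + 1, "bonus")
    return plan

def pick_melody(section: str, rng: random.Random) -> Optional[str]:
    """Only melody chunks cleared for this section may play (None if there are none)."""
    options = ALLOWED_MELODIES[section]
    return rng.choice(options) if options else None

def intensity_gains(performance: float) -> Dict[str, float]:
    """Crossfade weights for the low/medium/high versions of the current chunk.

    performance runs 0..1; triangular weights mean at most two adjacent
    levels sound at once and the weights always sum to 1.
    """
    level = min(max(performance, 0.0), 1.0) * 2  # 0 = low, 1 = medium, 2 = high
    return {name: max(0.0, 1.0 - abs(level - i))
            for i, name in enumerate(["low", "medium", "high"])}
```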

Without interactive audio, this project would have died as soon as players realised that they had no impact on how the music sounded and that the song was the same on every play. It's exciting to have been part of a project where these concepts were present from the beginning of development, and where the client was so open to exploring and pushing them.

What’s exciting in the future of interactive audio?

Having discussed where things are, it’s always nice to look at what might be coming – especially when the future of interactive audio is so bright. With so many tools to explore, I personally plan to delve into web audio next. As someone with a music and audio engineering background, who is now a part of the web team at S1T2, I may have an agenda, but I truly think web audio opens up many opportunities.

Web audio is built on an open standard, the W3C's Web Audio API, meaning it doesn't rely on proprietary software and is free for anyone to use and contribute to. It runs in a browser on virtually any platform (both mobile and desktop), which opens up new possibilities for how sound can be used in experiences. It also doesn't rely on a highly specced machine – we're already seeing how you can spread the CPU load over many devices, each contributing something to the soundscape.

Tools for web audio are also ingrained in the web development community – one of the more diverse, democratic (every computer comes with a browser) and open communities in tech (though yes, it still has a long way to go). I hope that as the usage of web audio spreads, we'll see strong development on this platform. While it may not yet have the number-crunching power of a low-level programming language, the opportunities it provides are very much worth exploring.

In the future, I also see more risks being taken with audio as part of interactive experiences. I hope to see the gap close between sound art and commercial activations, and I encourage everyone to be bolder and more exploratory with sound, treating it as 50% of an experience.

My number one dream for audio, though, is a growing willingness to budget for generative audio as part of physical installations. This is where technology, artistry, interaction and emotion all come into play to deliver something organic, lively, unpredictable and non-repetitive. It allows data captured in the installation space, whether through sensing or simpler factors such as the time of day, to influence and drive the direction of the sound and music, delivering something truly site-specific and bespoke.

Next time you begin planning a multimedia project, I encourage you to put sound on the map. By letting audio take an active role, feeding off user inputs and actions, and committing to a soundscape that is not static by design, I hope you'll start to experience sound in a new light.